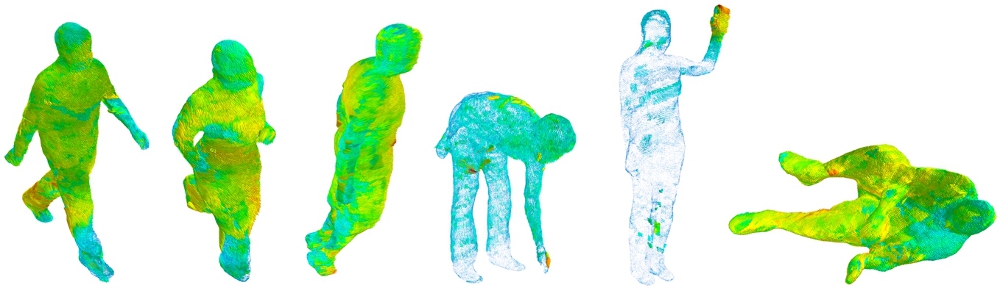

Database description:

The dataset features a total of 5724 annotated frames divided across three indoor scenes.

Activity in scenes 1 and 3 uses the full depth range of the Kinect for XBOX 360 sensor, whereas activity in scene 2 is constrained to a depth range of ±0.250 m in order to suppress the parallax between the two physical sensors. Scenes 1 and 2 are situated in a closed meeting room with little natural light to disturb the depth sensing, whereas scene 3 is situated in an area with wide windows and a substantial amount of sunlight. In each scene, three persons are interacting, reading, walking, sitting, etc.

Every person in the scene is annotated with a unique ID at pixel level in the RGB modality. For the thermal and depth modalities, annotations are transferred from the RGB images using the registration algorithm found in registrator.cpp.
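As an illustration of working with per-pixel ID annotations, the sketch below splits a label image into one boolean mask per person. It assumes the annotation has already been loaded as a 2D integer array in which each person's pixels carry that person's ID and background pixels carry 0. That encoding, the function name `split_person_masks` and the `background_id` parameter are assumptions made for this example, not something documented here; check the readme file for the actual mask format.

```python
import numpy as np


def split_person_masks(label_image, background_id=0):
    """Return {person_id: boolean mask} for every non-background ID.

    Assumes label_image is a 2D integer array where each pixel holds a
    person ID and background_id marks background (hypothetical encoding).
    """
    label_image = np.asarray(label_image)
    person_ids = [int(i) for i in np.unique(label_image) if i != background_id]
    return {pid: label_image == pid for pid in person_ids}


# Tiny synthetic label image standing in for one annotated frame.
labels = np.array([
    [0, 1, 1, 0],
    [0, 1, 2, 2],
    [3, 3, 2, 0],
])

masks = split_person_masks(labels)
for pid, mask in masks.items():
    print(pid, int(mask.sum()))  # person ID and its pixel count
```

The same function could be applied to the transferred thermal and depth annotations, provided they use the same ID encoding.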

Citation:

Palmero, C., Clapés, A., Bahnsen, C., Møgelmose, A., Moeslund, T. B., & Escalera, S. (2016). Multi-modal RGB–Depth–Thermal Human Body Segmentation. International Journal of Computer Vision, pp. 1-23.

Data set:

A readme file describing the data set can be found here.

Images can be downloaded from this link: TrimodalDataset.zip

The dataset is also available on Kaggle.